The most worrisome health challenges according to LLMs

What will the most worrisome global health challenge be in the next 30 years?

I asked ten Large Language Models the following question: “What will the most worrisome global health challenge be in the next 30 years?” In this article, you can find their answers. If you do not wish to read the whole article, here is a summary of the challenges these models identified most often:

- Antimicrobial resistance

- Climate change

- Non-communicable diseases

- Pandemics

- Inequalities (both in health and in society)

- Mental health

- Ethical issues raised by emerging technologies

I strongly advise you to read at least the first and the last responses, as they are the most insightful.

GPT-4 Turbo

Predicting the most significant global health challenges over the next 30 years involves considering current trends, emerging threats, and the changing global landscape. Here are several potential contenders:

- Antimicrobial Resistance (AMR): As bacteria, viruses, and fungi evolve to resist existing treatments, AMR could become a formidable challenge, leading to a situation where common infections and minor injuries could once again become deadly. The World Health Organization (WHO) has already flagged AMR as one of the top 10 global public health threats facing humanity.

- Climate Change: Health impacts related to climate change are expected to intensify, including heat-related illnesses, respiratory issues from poor air quality, and the spread of vector-borne diseases like malaria and dengue fever. Climate change also impacts food security and water availability, which in turn can lead to malnutrition and poor health.

- Pandemics: The COVID-19 pandemic highlighted the global vulnerability to emerging infectious diseases. Future pandemics could arise from other novel viruses or known threats that evolve in new ways. The increasing interaction between humans and wildlife, along with global travel and urbanization, heightens this risk.

- Non-communicable Diseases (NCDs): Diseases like diabetes, heart disease, and cancer are projected to increase, particularly in low- and middle-income countries. These diseases strain healthcare systems and are influenced by aging populations, lifestyle changes, and possibly by environmental factors.

- Mental Health: Mental health issues are becoming increasingly recognized as critical components of global health. Issues like depression and anxiety impose significant disease burdens and are affected by numerous factors from societal changes to economic instability.

- Health Inequities: Disparities in health outcomes between different groups within and across countries are likely to persist or worsen unless addressed. These disparities often arise from inequalities in social determinants of health such as income, education, employment, and access to healthcare.

- Technological and Biosecurity Risks: Advances in technology such as synthetic biology and artificial intelligence offer tremendous potential benefits but also come with risks. Misuse, accidents, or unintended consequences could lead to new health crises.

Addressing these challenges will require coordinated global efforts, including investment in research, healthcare infrastructure, disease surveillance, and public health initiatives. Effective leadership and governance will also be critical in navigating the complex interplay of factors that influence global health.

REKA-CORE

Predicting the most worrisome global health challenge over the next 30 years involves considering several factors, including emerging diseases, climate change, antibiotic resistance, and changes in population demographics. Here are a few potential candidates:

- Antimicrobial Resistance (AMR): The overuse and misuse of antibiotics, antivirals, and antifungals have led to the emergence of resistant strains of bacteria, viruses, and fungi. This could render many current treatments ineffective, leading to longer hospital stays, higher healthcare costs, and increased mortality rates from infectious diseases.

- Climate Change and Health: Climate change is expected to have a profound impact on global health, exacerbating existing health challenges and creating new ones. This includes the spread of vector-borne diseases (like malaria and dengue fever) into new areas, increased frequency of heatwaves leading to heat stress and dehydration, and more severe natural disasters affecting public health infrastructure.

- Non-Communicable Diseases (NCDs): As populations age and lifestyles change, NCDs such as cardiovascular diseases, cancer, diabetes, and chronic respiratory diseases are likely to become an even greater burden. These diseases are often linked to modifiable risk factors like diet, physical activity, tobacco use, and alcohol consumption, making prevention a complex challenge.

- Emerging Infectious Diseases: The emergence of new infectious diseases (like COVID-19) and the re-emergence of previously controlled diseases pose ongoing threats. Global connectivity and population density can facilitate the rapid spread of diseases, making early detection, containment, and response critical.

- Mental Health: The global burden of mental health disorders is expected to increase, influenced by factors such as social isolation, economic stress, and the impacts of climate change. Addressing mental health will require integrated approaches that go beyond traditional healthcare systems.

- Access to Healthcare and Health Inequities: Global disparities in access to healthcare services, quality of care, and health outcomes are likely to remain a significant challenge. Ensuring equitable access to healthcare, especially in low-resource settings, will be crucial for addressing global health needs.

Each of these challenges presents unique difficulties and will require coordinated global efforts, innovative solutions, and significant investment to mitigate their impact on public health.

yi-34b-chat

Predicting the most worrisome global health challenge for the next 30 years is challenging due to the complex and dynamic nature of health threats. However, based on current trends and the potential impact of various factors, several issues stand out as likely to have a significant impact on global health:

- Climate Change: Climate change is expected to exacerbate many health challenges, including the spread of vector-borne diseases, food and water insecurity, and heat-related illnesses.

- Antimicrobial Resistance (AMR): The increasing resistance of bacteria, viruses, and parasites to existing drugs is a growing concern, potentially leading to a post-antibiotic era where common infections become untreatable.

- Non-Communicable Diseases (NCDs): With the rise of chronic diseases like heart disease, cancer, diabetes, and respiratory diseases, the burden of NCDs is expected to increase, particularly in low- and middle-income countries.

- Infectious Diseases: Emerging and re-emerging infectious diseases, such as COVID-19, Ebola, Zika, and multi-drug resistant tuberculosis, can rapidly become global health emergencies.

- Mental Health: The prevalence of mental health conditions is increasing, and the impact of social, economic, and environmental changes is likely to exacerbate this trend.

- Health Disparities: The gap in health outcomes between different populations, often linked to social determinants of health, is a persistent challenge that is likely to persist or worsen due to economic and social inequalities.

- Healthcare Access and Affordability: As the population ages and the demand for healthcare increases, ensuring access to affordable, quality healthcare services will become increasingly critical.

- Global Health Security: The ability of countries to prevent, detect, and respond to health emergencies will be crucial in an interconnected world.

- Emerging Technologies: The integration of new technologies into healthcare, while potentially transformative, also raises ethical, legal, and social issues that need to be addressed.

- Urbanization and Environmental Health: The health impacts of rapid urbanization, including air pollution and inadequate urban planning, will disproportionately affect vulnerable populations.

To prepare for and address these challenges, it will be essential for governments, international organizations, researchers, healthcare providers, and communities to work together to develop and implement sustainable health policies, strategies, and interventions. Adaptation, resilience, and innovation will be key in facing the evolving health threats of the future.

Gemini 1.5 PRO

Predicting the Most Worrisome Global Health Challenge in the Next 30 Years

Predicting the future of global health is a complex task, as it involves considering various interacting factors like evolving diseases, technological advancements, climate change, and socioeconomic trends. However, based on current knowledge and trends, here are some potential candidates for the most worrisome global health challenges in the next 30 years:

1. Antimicrobial Resistance (AMR):

- The Problem: The overuse and misuse of antibiotics have led to the emergence of drug-resistant bacteria, making infections increasingly difficult to treat. This could potentially lead to a post-antibiotic era where common infections become life-threatening.

- Why it’s Worrisome: AMR poses a significant threat to global health security, potentially undermining modern medicine and leading to increased mortality and morbidity from previously treatable infections.

- What can be done: Strategies to combat AMR include promoting responsible antibiotic use, investing in research and development of new antibiotics and alternative therapies, and strengthening surveillance systems.

2. Emerging and Re-emerging Infectious Diseases:

- The Problem: Factors like climate change, globalization, and ecological disruption increase the risk of new infectious diseases emerging and old ones re-emerging. Examples include zoonotic diseases (diseases transmitted from animals to humans) like COVID-19 and vector-borne diseases like Zika and Dengue.

- Why it’s Worrisome: These diseases can cause large-scale outbreaks and pandemics with significant health and economic consequences.

- What can be done: Strengthening global surveillance systems, investing in early detection and rapid response mechanisms, and promoting research on vaccines and treatments are crucial.

3. Non-Communicable Diseases (NCDs):

- The Problem: NCDs like heart disease, cancer, diabetes, and chronic respiratory diseases are already the leading causes of death globally. Their prevalence is expected to rise further due to aging populations, unhealthy lifestyles, and environmental factors.

- Why it’s Worrisome: NCDs place a significant burden on healthcare systems and economies, impacting individuals, families, and societies.

- What can be done: Promoting healthy lifestyles, implementing preventive measures, and ensuring access to affordable and quality healthcare services are essential to address NCDs.

4. Climate Change and Health:

- The Problem: Climate change impacts health directly through extreme weather events and indirectly by influencing factors like air pollution, water quality, and food security.

- Why it’s Worrisome: The health effects of climate change are expected to worsen, disproportionately affecting vulnerable populations and exacerbating existing health inequities.

- What can be done: Mitigation and adaptation strategies are crucial. This includes reducing greenhouse gas emissions, investing in climate-resilient healthcare systems, and supporting communities to adapt to climate change impacts.

5. Health Inequities:

- The Problem: Unequal access to healthcare services and disparities in health outcomes persist within and between countries.

- Why it’s Worrisome: Health inequities lead to unnecessary suffering and preventable deaths, hindering social and economic development.

- What can be done: Addressing social determinants of health, strengthening health systems, and promoting universal health coverage are essential to reducing health inequities.

In conclusion, while it’s difficult to predict with certainty, the next 30 years will likely see a complex interplay of these challenges. Addressing them will require a multi-faceted approach involving collaboration across sectors, sustained investment in research and innovation, and a commitment to global health equity.

CommandR Plus

It is difficult to predict with certainty what the most worrisome global health challenge will be in the next 30 years, as the field of health and healthcare is constantly evolving and new challenges can emerge unexpectedly. However, based on current trends and ongoing issues, some potential global health challenges that may cause concern in the next 30 years include:

- Infectious diseases: The emergence and spread of new infectious diseases, such as COVID-19, could pose a significant threat to global health. As the world becomes more interconnected and global travel becomes easier, the risk of disease outbreaks spreading rapidly across borders increases. Additionally, the rise of antibiotic resistance and the evolving nature of pathogens could make treating and controlling infectious diseases more challenging.

- Non-communicable diseases: Non-communicable diseases, such as heart disease, stroke, cancer, and diabetes, are already the leading causes of death and disability worldwide. In the next 30 years, the global burden of these diseases is expected to increase as populations age and risk factors such as obesity and physical inactivity become more prevalent. Addressing non-communicable diseases will require a multifaceted approach, including prevention, early detection, and improved access to healthcare.

- Mental health: Mental health issues, such as depression, anxiety, and substance use disorders, are already a significant burden on global health, and their impact is expected to grow in the coming decades. Stigma and lack of access to mental health services are ongoing challenges that will need to be addressed to improve mental health outcomes.

- Climate change: Climate change is expected to have significant impacts on global health in the coming decades. Extreme weather events, such as heat waves, floods, and droughts, can directly cause injuries and deaths, as well as contribute to the spread of infectious diseases and food and water insecurity. Additionally, climate change may lead to population displacement and migration, which can further strain healthcare systems and resources.

- Healthcare access and inequality: Inequalities in access to healthcare are likely to persist and potentially worsen in the next 30 years, as the cost of healthcare continues to rise and healthcare resources become increasingly strained. This could lead to disparities in health outcomes between different populations and regions.

- Emerging technologies: The rapid advancement of technology, such as artificial intelligence and gene editing, could also present new challenges and ethical dilemmas in the field of healthcare. While these technologies have the potential to revolutionize healthcare, they also raise complex questions about privacy, equity, and the potential for misuse.

It is important to note that these are potential challenges and the future of global health will depend on a variety of factors, including advancements in medical research, public health initiatives, and global collaboration. By identifying and addressing these challenges now, we can work towards building a healthier and more resilient future for all.

GPT-3.5

Predicting the most worrisome global health challenge over the next 30 years is complex and uncertain, as it can be influenced by various factors such as emerging diseases, environmental changes, antimicrobial resistance, and social disparities. However, some experts suggest that antimicrobial resistance could become a significant threat to global health in the coming decades. This is due to the overuse and misuse of antibiotics leading to the development of drug-resistant strains of bacteria, making infections more difficult to treat. Addressing this issue will require coordinated efforts from governments, healthcare providers, and the public to develop new treatment strategies and promote responsible antibiotic use.

im-also-a-good-gpt2-chatbot

Predicting the most worrisome global health challenge over the next 30 years is complex due to the dynamic nature of global health issues. However, several key challenges stand out based on current trends and projections:

Climate Change and Health Impacts

- Extreme Weather Events: Increased frequency and severity of heatwaves, hurricanes, floods, and droughts leading to direct health impacts.

- Vector-borne Diseases: Expansion of habitats for mosquitoes and ticks could increase the spread of diseases like malaria, dengue, and Lyme disease.

- Food and Water Security: Changes in agricultural productivity and water availability could exacerbate malnutrition and waterborne diseases.

Antimicrobial Resistance (AMR)

- Superbugs: The rise of drug-resistant bacteria, viruses, and fungi could render many existing treatments ineffective, making infections harder to treat and leading to higher morbidity and mortality rates.

- Global Spread: International travel and trade could facilitate the rapid spread of resistant pathogens.

Pandemics and Emerging Infectious Diseases

- Novel Pathogens: The risk of new zoonotic diseases spilling over into human populations remains high due to habitat encroachment, wildlife trade, and intensive agriculture.

- Preparedness and Response: Despite lessons from COVID-19, challenges in global coordination, equitable vaccine distribution, and health infrastructure remain.

Non-Communicable Diseases (NCDs)

- Chronic Diseases: Rising rates of heart disease, diabetes, cancer, and respiratory diseases due to aging populations, lifestyle factors, and urbanization.

- Mental Health: Growing burden of mental health disorders, particularly anxiety and depression, exacerbated by socioeconomic stressors and social isolation.

Health Inequities

- Access to Healthcare: Disparities in healthcare access between and within countries could worsen health outcomes.

- Socioeconomic Determinants: Poverty, education, and inequality will continue to impact health outcomes disproportionately.

Technological and Ethical Challenges

- Healthcare Technology: Balancing the benefits of digital health, AI, and genomic medicine with ethical concerns and equitable access.

- Data Privacy: Protecting patient data while harnessing big data for health insights.

Conclusion

Among these, climate change and its health impacts seem to be the most worrying due to their pervasive and compounding effects on other health challenges. For instance, climate change can exacerbate infectious disease outbreaks, impair food and water security, and increase the prevalence of chronic diseases through environmental stressors.

What Can Be Done?

- Global Collaboration: Strengthening international cooperation and institutions for coordinated responses.

- Investment in Health Systems: Building resilient health infrastructure.

- Research and Innovation: Fostering technological advancements and equitable access to treatments.

- Public Health Policies: Implementing proactive policies that address climate change, AMR, and emerging diseases.

Ultimately, the global health landscape will require adaptive, interdisciplinary, and collaborative approaches to navigate the challenges of the next 30 years.

Gemma-7B v1.1

1. Climate Change and its Health Impacts:

- Rising temperatures, extreme weather events, and air pollution will lead to increased heat-related illnesses, respiratory problems, mental health disorders, and displacement of populations.

2. Infectious Disease Emergence and Evolution:

- Increased antimicrobial resistance, globalization, and wildlife-human contact will facilitate the emergence and spread of novel infectious diseases.

- Rapidly evolving pathogens and changing disease patterns will pose ongoing threats to global health security.

3. Chronic Diseases:

- Aging populations, unhealthy diets, and sedentary lifestyles will contribute to a surge in chronic diseases such as heart disease, cancer, and diabetes.

- The burden of these diseases will strain healthcare systems and economies.

4. Mental Health and Substance Abuse:

- Increasing rates of depression, anxiety, and substance use disorders will pose significant health and social challenges.

- Access to mental health services and support will be a critical need.

5. Health Equity and Access:

- Disparities in healthcare access and outcomes will widen, leading to increased health inequities.

- Ensuring equitable access to quality healthcare will be a major global priority.

6. Antimicrobial Resistance:

- Continued overuse and misuse of antibiotics will accelerate the rise of antimicrobial resistance, leading to treatment failures and increased mortality.

7. Urbanization and Population Growth:

- Rapid urbanization and population growth in developing countries will strain healthcare systems and infrastructure.

- Air pollution, noise pollution, and overcrowding will contribute to health problems.

8. Occupational Health and Safety:

- Automation and technological advancements will create new jobs but also expose workers to new health risks.

- Ensuring safe and healthy working environments will be essential.

9. Social and Economic Factors:

- Economic inequality, social unrest, and political instability can negatively impact health outcomes.

10. Emerging Technologies:

- Rapid advancements in biotechnology and digital health will bring new opportunities but also potential risks to health privacy and access.

Qwen-32B-chat

As an AI language model, I cannot predict the future with certainty, but based on current trends and expert opinions, there are several global health challenges that could be particularly worrisome in the next 30 years:

- Antimicrobial Resistance (AMR): The overuse and misuse of antibiotics have led to the emergence of drug-resistant bacteria, viruses, and other pathogens, making it harder to treat infections. AMR is already a significant problem, and if not addressed, it could lead to a global health crisis.

- Non-Communicable Diseases (NCDs): Chronic conditions such as heart disease, cancer, diabetes, and respiratory diseases are on the rise globally and are expected to continue to be a major health challenge. They are often linked to lifestyle factors such as poor diet, lack of exercise, and smoking.

- Pandemics and Emerging Infectious Diseases: The COVID-19 pandemic has demonstrated how quickly a new virus can spread globally, causing significant morbidity and mortality. Other infectious diseases, such as Ebola, Zika, and Nipah virus, have also emerged in recent years, highlighting the need for better preparedness and response.

- Climate Change: Climate change is likely to have significant impacts on global health, including the spread of infectious diseases, increased air pollution, and extreme weather events that can disrupt health systems and exacerbate existing health disparities.

- Mental Health: Mental health conditions are already a significant burden globally, and the stress and isolation caused by factors such as the COVID-19 pandemic, economic instability, and social unrest are likely to exacerbate this challenge.

It’s important to note that these challenges are interconnected and may have compounding effects on global health in the coming decades. Addressing them will require collaboration, innovation, and sustained investment in public health infrastructure and research.

Llama-3 70B Instruct

What a timely and important question! Based on current trends, research, and expert opinions, I’ll outline some of the most pressing global health challenges that may emerge or continue to worsen over the next 30 years. While it’s difficult to pinpoint a single most worrisome challenge, I’ll highlight a few that could have significant impacts:

- Antimicrobial Resistance (AMR): The rise of antibiotic-resistant bacteria, viruses, and fungi may lead to a post-antibiotic era, where common infections become untreatable. This could result in millions of deaths, economic losses, and a significant burden on healthcare systems.

- Climate Change and Health: Climate change will likely exacerbate health issues like heat stress, vector-borne diseases (e.g., malaria, dengue), and water scarcity. It may also increase the spread of infectious diseases, as changing weather patterns alter ecosystems and facilitate the movement of disease-carrying insects.

- Pandemics and Infectious Diseases: The COVID-19 pandemic has shown us how quickly infectious diseases can spread globally. Future pandemics, such as those caused by influenza, SARS, or MERS, could have devastating consequences. The increasing frequency and severity of outbreaks are exacerbated by factors like global travel, urbanization, and antimicrobial resistance.

- Non-Communicable Diseases (NCDs): The rising prevalence of NCDs, such as diabetes, heart disease, and certain types of cancer, will continue to strain healthcare systems and economies. The increasing burden of NCDs in low- and middle-income countries may worsen health disparities and limit economic growth.

- Mental Health: Mental health issues, including depression, anxiety, and substance abuse, are already a significant concern. The World Health Organization (WHO) estimates that mental health disorders will become the leading cause of disability globally by 2030, with significant economic and social implications.

- Global Health Inequality: The growing gap in healthcare access, quality, and outcomes between high-income and low-income countries may lead to increased health disparities, social unrest, and economic instability.

- Healthcare Systems Overwhelm: The increasing demand for healthcare services, combined with aging populations, may overwhelm healthcare systems, leading to reduced quality of care, longer wait times, and increased costs.

- Dementia and Neurological Disorders: The projected rise in dementia cases, particularly in low- and middle-income countries, will pose significant challenges to healthcare systems, families, and economies.

- Vaccine Hesitancy and Misinformation: The growing trend of vaccine hesitancy, fueled by misinformation and misconceptions, may lead to outbreaks of preventable diseases, undermining decades of progress in global health.

- Healthcare Workforce Shortages: The projected shortage of healthcare professionals, including doctors, nurses, and other essential workers, may compromise healthcare quality and access, particularly in low-income countries.

While these challenges are interconnected and complex, addressing them will require collaborative efforts from governments, international organizations, healthcare professionals, and individuals. By prioritizing global health investments, research, and innovation, we can mitigate the impact of these challenges and create a healthier, more equitable world for all.

Conclusion

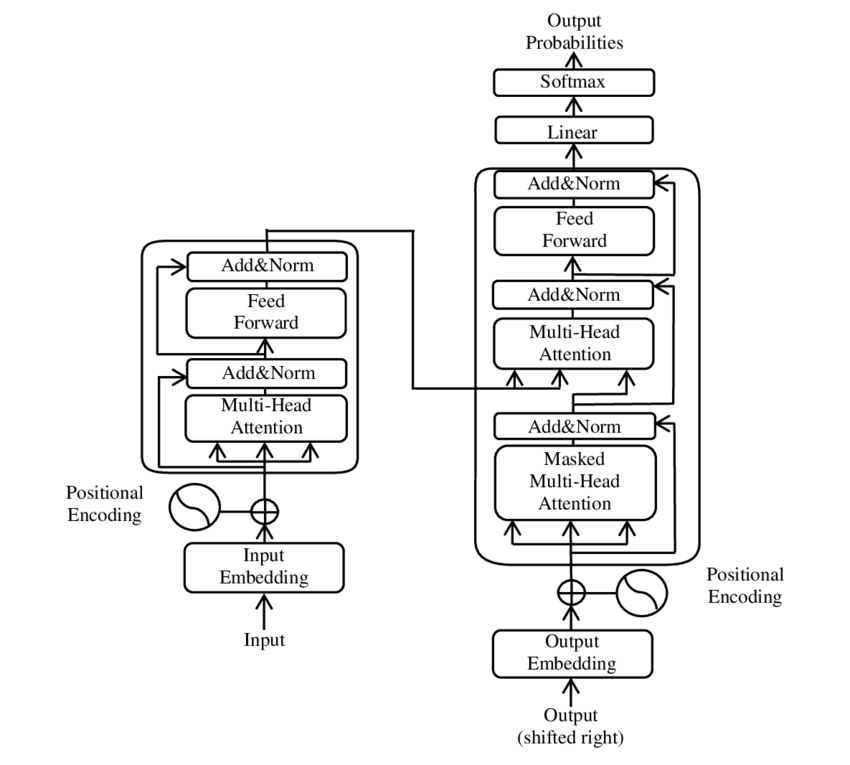

Now that we have read through all these answers, two questions remain on our minds: can AI be used to advise us on future challenges and suggest solutions? Can we leverage the power of Artificial Intelligence models to build a better future for the coming generations?

These questions should concern all of us, because today’s commitment defines tomorrow’s success. So let’s be proactive and look for solutions, so that we can contribute to the improvement of humanity and of Planet Earth!